Prompt Studio

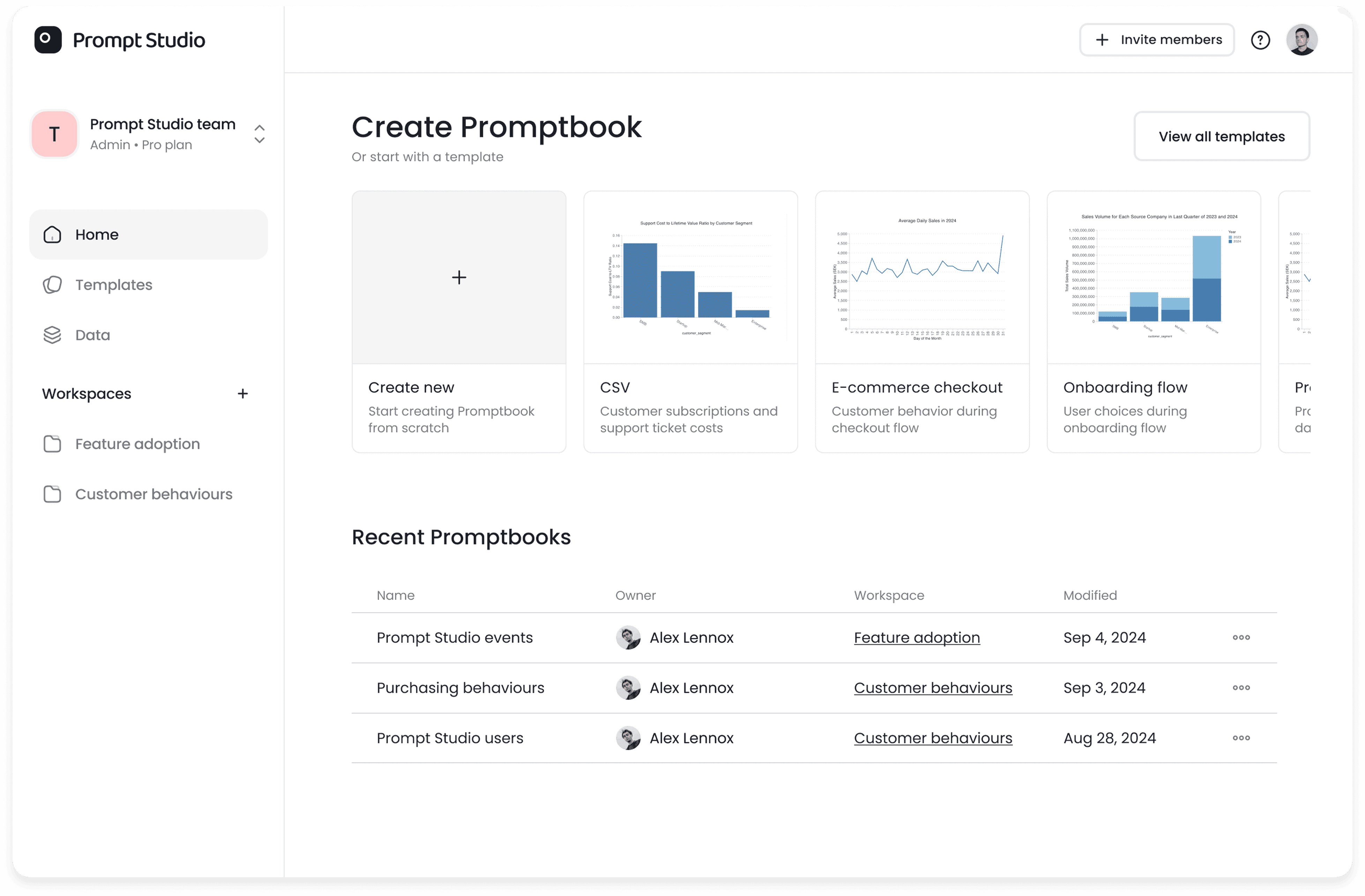

Your fastest path to meaningful insights

Empower your team with self-service analytics and go from raw data to impactful decisions, all within a collaborative AI-powered workspace.

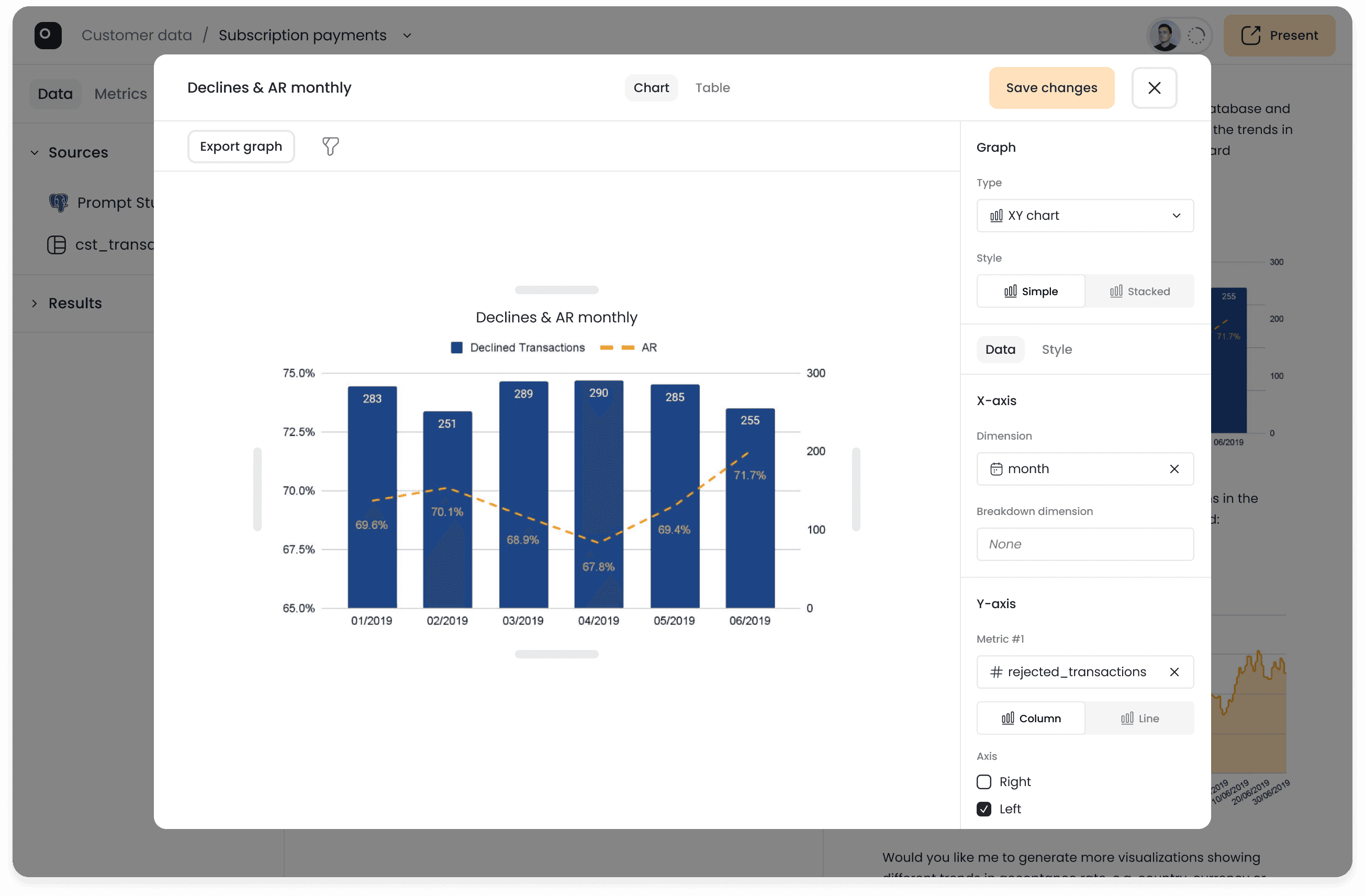

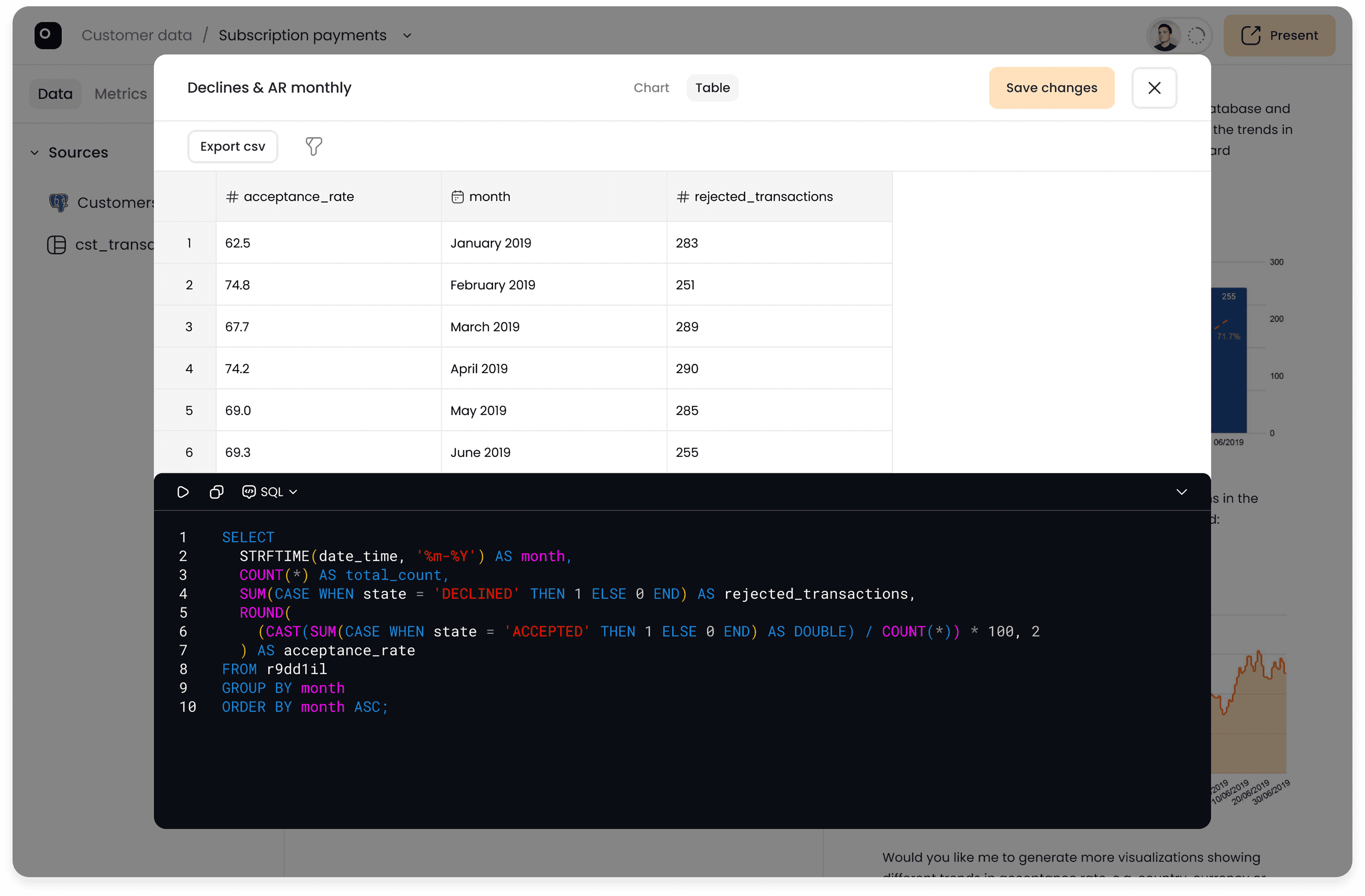

Simply type a question to explore your data, get insights and generate visualizations in seconds, not hours.

Answer complex ad-hoc questions in minutes

Talk to your data

AI acts as a bridge between your data and product teams, making it easy for everyone to access and work with data

Full transparency

Stay in control and audit every step the AI takes to fulfill your requests

Safety first

We prioritize the security of your data. With the enterprise version of Prompt Studio, all your information stays securely in your cloud.

Insights that matter

Move beyond rigid, predefined dashboards and quickly answer even the most complex or ad-hoc data questions

Seamless collaboration

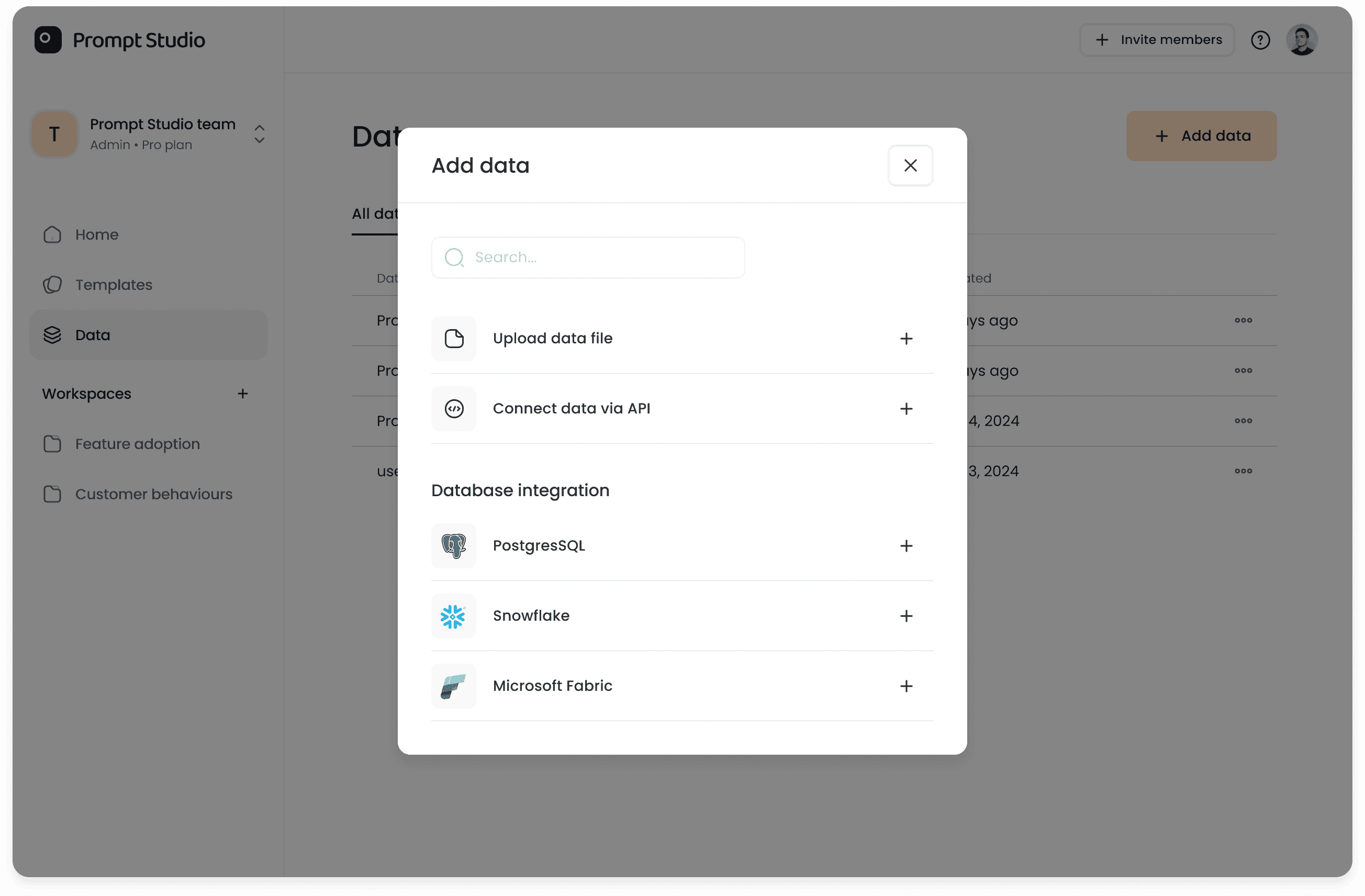

Work together in Promptbooks, create teams, manage permissions, and share presentation-ready insights with external collaborators

No code required

Your entire team can generate insights and visualizations from the data without writing a single line of code

Use Cases

Self-service analytics in your organization

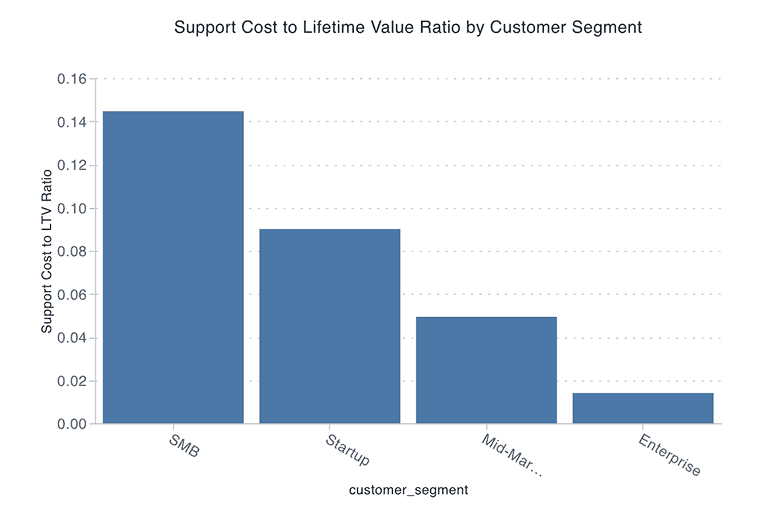

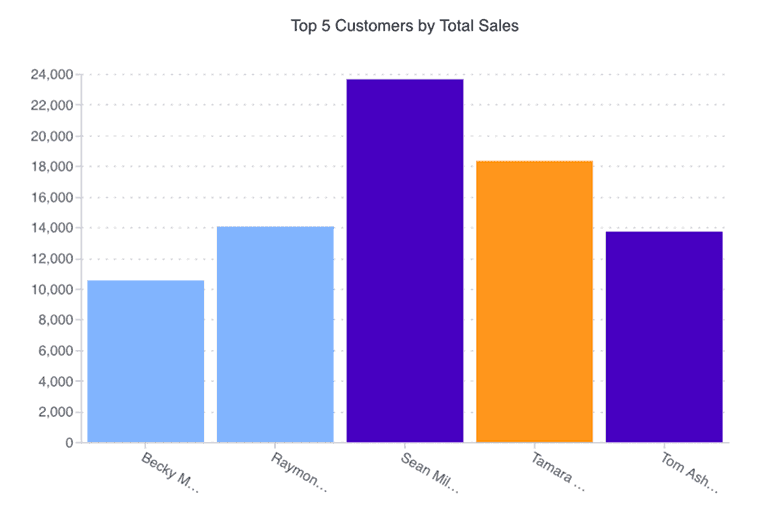

Sales

Superstore Sales Dataset

Sales data including information on products, orders and customers.

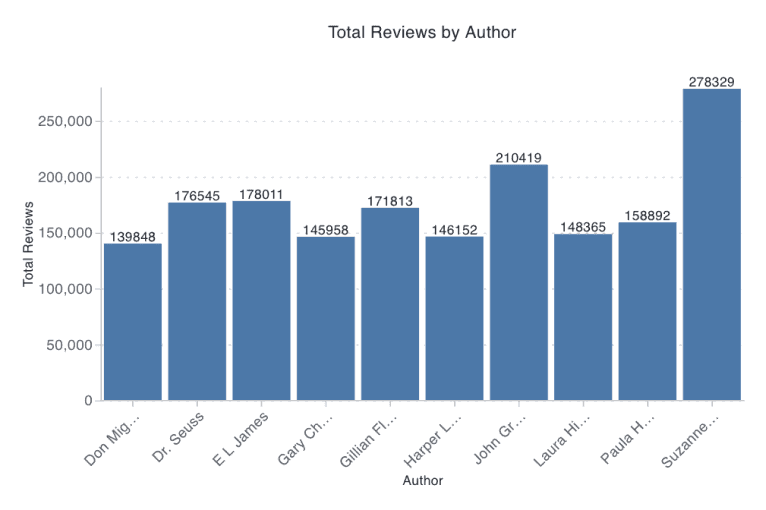

Sales

50 Bestselling Books

Amazon's top 50 bestselling books for each year from 2009 to 2019.